7 Steps to Fast SPARQL Queries

Get the latest in your inbox

Get the latest in your inbox

You want SPARQL queries in Stardog to be as fast as possible. And we know all the secrets. All you have to do is read.

In this post I’ll teach you the 7-step process to writing fast SPARQL queries in Stardog. Let’s get started.

At the core of Stardog lies the query optimizer whose mission is precisely to achieve optimal performance on every input query. The optimizer may rewrite a query into a form which is more efficient to execute but gives all the same answers. Sometimes, however, it may require a little help from the user to find the most efficient query execution plan.

Understanding query plans is key to addressing query performance issues. So go read my blog post about Stardog query plans and I’ll wait.

The first question is—why are we writing a blog post on guiding users how to write efficient queries instead of working on our optimizer so that every query is efficient?

Wouldn’t it be nice if every hard or poorly written query were automagically turned into a fast query? Yes. It would. And on everyone’s birthday there should be a 🌈.

But there are a few complications standing in the way of our beautiful dream. These are general database challenges.

First, the optimizer only performs transformations which preserve the semantics of the original query. It is possible, for example, to write a query whose result set explodes on any non-trivial database. There’s nothing that the optimizer can do about it (other than give insights through the query plan).

Second, some datasets contain complex correlations which are not captured in statistics computed by Stardog and available to the query optimizer. It is generally infeasible to perfectly estimate selectivity for an arbitrary subset of a SPARQL query to plan it optimally.

Third, statistics may be stale if the database is under constant write pressure.

There’s the server option index.statistics.update.automatic which governs when

statistic should be re-computed after updates. However it’s possible that some

update is not large enough to re-compute the statistics for the entire database

but touches enough of the data to make the statistics inaccurate for a

particular query. You can always force re-computation via the stardog-admin db optimize CLI command.

Finally, even if the statistics were always available for any subset of the query, it wouldn’t be possible to find the optimal plan in all cases and in reasonable time. Finding the optimal join tree for a conjunctive query is a well-studied computational problem and is known, alas, to be NP-hard.

While efficient algorithms and heuristics exist and we never stop working on them, Stardog cannot guarantee the optimal execution plan for every query because no database (that’s sufficiently expressive) can. Anyone who promises otherwise is not to be trusted.

To avoid this post being too long, you can read more about the subject of avoidably slow queries [here](/blog/7-steps-to-fast-sparql-queries/2/).

So your query runs slower than acceptable; you’ve verified that it’s written as intended; and you cannot make it more specific. What now? Well, there’s no silver bullet. Don’t waste time or mental energy hunting for a silver bullet.

The following recipe is the secret heart of Stardog query optimization, which we’ve followed consistently for years.

For simplicity we restrict our attention to basic graph patterns (a.k.a. BGPs), i.e. sets of triple patterns.

count(*) in projection instead of SELECT *.group.joins in StardogWe’re working on a query performance analysis tool to automate this “guess’n’check” procedure. Stay tuned.

Let’s illustrate this on the following query which identifies deals made by an employee already involved in the same matter as a customer that’s listed in that deal.

This is a greatly simplified example of a typical query to detect potential frauds where the data has hidden relations between people involved in the deal. Typically such queries have many BGPs (sometimes dozens of triple patterns) and it’s not as easy to identify the problematic parts, but the general idea remains the same.

SELECT * WHERE {

?deal a :Deal ;

:employee ?employee ;

:customer ?customer .

?employee :name ?employeeName ;

:involvedAsEmployee ?matter .

?customer :name ?customerName ;

:involvedAsCustomer ?matter .

}

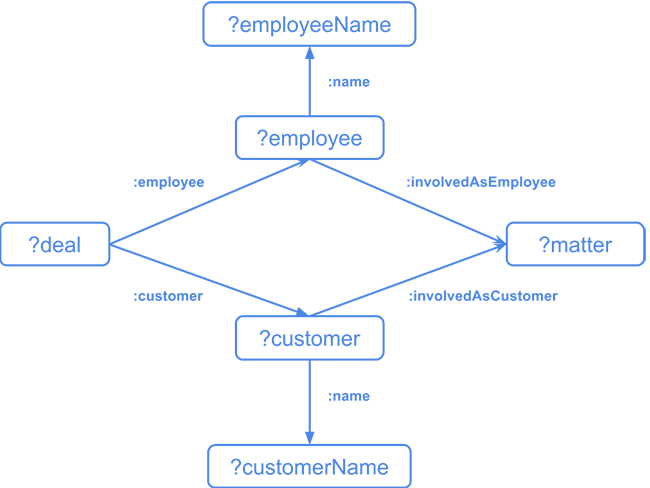

If we look at the graphical representation of this query, we’ll see the following diamond structure:

Stardog’s optimizer will detect that the :name relation is functional, which

means the joins with the patterns ?employee :name ?employeeName and

?customer :name ?custName should not create trouble.

The key planning question here is which join—on the ?deal or the

?matter—should be evaluated first? The answer depends on the data: if the

deal join is selective it should be done first, or if few people were involved

in the same matter, the matter join should be done first.

This is exactly the kind of decision-making that the optimizer is responsible for. In the ideal world, it’ll decide based on statistics and will decide correctly. We don’t live in the ideal world, though, so in some situations (outdated statistics or complex inter-dependencies in the data) it can make a wrong choice.

The deal join is a join on the subject variable while the matter join is a join on the object variable. Stardog has better statistics about the former because star-shaped n-ary join queries are common.

So it’s possible that there are many deals and the optimizer decides to first find people which were involved in the same matter, but it turns out that some matters in the past involved many people. So that join is not selective and the optimizer made a wrong choice. We’re now at the step 3 of the algorithm above.

First, let’s look at the query plan:

Projection(?deal, ?employee, ?customer, ?employeeName, ?custName, ?matter) [#500]

`─ MergeJoin(?customer) [500]

+─ MergeJoin(?customer) [#1000]

│ +─ Sort(?customer) [#1000]

│ │ `─ MergeJoin(?matter) [#1000]

│ │ +─ Scan[POSC](?customer, :involvedAsCustomer, ?matter) [#100000]

│ │ `─ Scan[POSC](?employee, :involvedAsEmployee, ?matter) [#100000]

│ `─ Scan[PSOC](?customer, :name, ?customerName) [#1000000]

`─ Sort(?customer) [#1000000]

`─ MergeJoin(?employee) [#1000000]

+─ Sort(?employee) [#1000000]

│ `─ NaryJoin(?deal) [#1000000]

│ +─ Scan[POSC](?deal, <http://www.w3.org/1999/02/22-rdf-syntax-ns#type>, :Deal) [#1000000]

│ +─ Scan[PSOC](?deal, :customer, ?customer) [#1000000]

│ `─ Scan[PSOC](?deal, :employee, ?employee) [#1000000]

`─ Scan[PSOC](?employee, :name, ?employeeName) [#1000000]

We can see that the results of the join on ?matter are sorted

(in memory, with possible spilling to disk)

because the optimizer thinks there are only around 1000 solutions to sort. This

looks like a potential culprit behind poor performance. We can test this

hypothesis by running this join in a dedicated query (step 4):

SELECT (count(*) as ?count) WHERE {

?employee :involvedAsEmployee ?matter .

?customer :involvedAsCustomer ?matter .

}

Note: running a query with count(*) is typically faster than retrieving all

bindings sets and counting them on the client side.

When this returns a lot of results, we know it’s a performance problem. The question is whether it returns, say, an order of magnitude more results than the estimation in the original plan. If this is the case, the optimizer made its join order choice based on inaccurate information and there may be a better plan in which this join is deferred. Assuming that’s what we see, we move to #6 (otherwise we look at other potentially problematic joins in the plan and test them separately).

Step 6—tearing apart problematic queries—is where query hints in Stardog 5

make your life easier. In earlier versions of Stardog, you’d have to put the two

patterns above in different subqueries but now the group.joins hint will

suffice:

SELECT * WHERE {

{ #pragma group.joins

?deal :employee ?employee .

?employee :name ?employeeName ;

:involvedAsEmployee ?matter .

}

{ #pragma group.joins

?deal :customer ?customer .

?customer :name ?custName ;

:involvedAsCustomer ?matter .

}

?deal a :Deal .

}

This hint tells the optimizer to first compute the join trees for the respective

scopes (three patterns in each) and only then join them together. There are two

reasons the query can now run faster: first, not all people involved in some

earlier matters may co-occur in deals (as customers or employees); second, now

the join is on two variables (?deal and ?matter) which gives the query

engine extra opportunities to prune intermediate results.

Be warned not to introduce disconnected scopes in this way, i.e. it should be possible to create a join tree where each join has a join condition (i.e. a shared variable between two patterns). If not—that is, if a scope has two or more disconnected groups of patterns—evaluation would have to compute their Cartesian product, which is typically slow. Cartesian products are computed using loop joins so keep an eye out for those generally.

While analytic queries are indispensable to some applications, others often require running specific queries which fetch information related to particular nodes in the graph. Such queries are typically much easier for Stardog since it can quickly filter our irrelevant information by pushing the selective patterns deep into the plan and evaluating them early in the execution. It is almost always better to include selective patterns in the query rather than doing filtering on the client side.

There are at least four simple ways to make a query specific:

VALUES ?var { :x :y ... } construct in SPARQL to statically bind

the variable to a constant.BIND(:x as ?var) construct (but make sure to put this before any

other occurrence of ?var in the same scope).FILTER(?var = :x) or FILTER(?var IN :x, :y, ...) but keep in mind the

subtle difference between equality and identity discussed above.

Stardog 5 includes optimizations to push such selective patterns into nested

scopes, e.g. UNIONs, so there is generally no need to manually work through

all occurrences of a variable to bind it to a constant.

SPARQL optimization is a hard problem. We think of slow queries in two buckets: poorly written queries and poorly planned queries. The former should be detected and fixed by the user since they typically don’t capture the actual intention.

The latter require some joint effort from both us (Stardog developers) and the user: we promise to keep improving the optimizer, from more advanced statistics to learning from past mistakes. In the mean time you can try a couple of the above tricks to help the optimizer.

Finally, you never need to ask us whether we want to see your slow query and the data to reproduce a performance issue. We always do.

Just send it over (including in an obfuscated form to preserve privacy) and we promise to take a look. Lots of improvements to Stardog’s query optimizer have been made in response to user feedback and we’ll continue to work hard to ensure that your queries run as fast as possible.

Get started with Stardog - free versions available in the cloud or to download.

How to Overcome a Major Enterprise Liability and Unleash Massive Potential

Download for free