Safety RAG: Improving AI Safety by Extending AI's Data Reach

Today we introduce Safety RAG (SRAG), the underlying GenAI application architecture for Stardog Voicebox. Our goal is to make Stardog Voicebox the best AI data assistant available, helping people gain insights from their data quickly and 100% hallucination free.

Hallucinations are bad for business and threaten AI acceptance (see here, here, here, here, here, here, here, and here). Enterprise GenAI, especially in regulated industries like banking, pharma, and manufacturing, needs a corrective supplement to RAG-with-LLM, which alone is insufficient to deliver safe AI. SRAG takes the "data matters more than AI algorithms" rubric seriously and literally. Safety RAG is safer than RAG because it's intentionally designed to be hallucination-free, and it provides a more complete data environment for enterprise GenAI: SRAG extends AI's data reach from documents only (RAG) to both databases and documents.

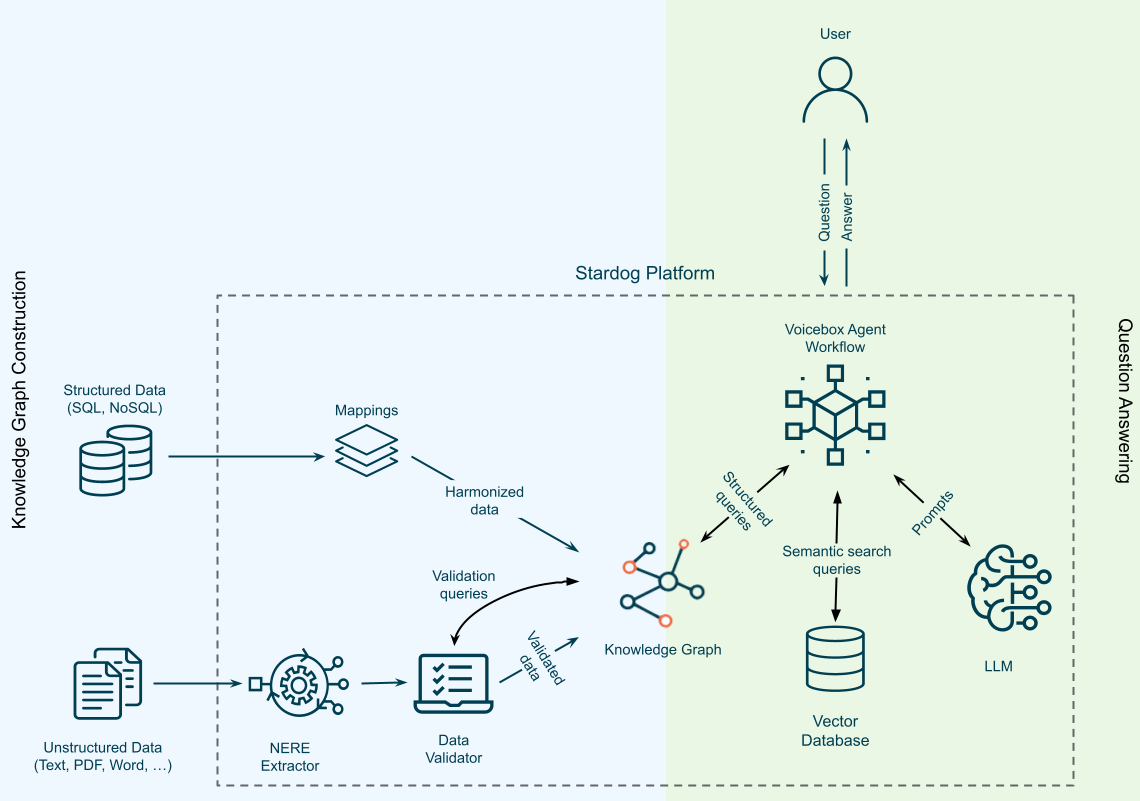

In this post we'll first discuss why hallucinations make RAG-with-LLM unsafe for high-stakes enterprise use cases; you can find more detail in our LLM Hallucinations FAQ. Second, we take a deep dive into Safety RAG: its reference architecture; how SRAG handles document-resident knowledge (DRK); how SRAG uses records-resident knowledge (RRK) to ground LLM outputs; and how SRAG can make RAG-with-LLM apps safer. Third, we take a brief look at the novel agent workflow pattern that Voicebox and SRAG use internally.

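There are two obstacles with the dominant GenAI application pattern, RAG-with-LLM, in enterprise settings: LLM hallucinations make it unsafe for high-stakes use cases, and its data reach is limited to documents, leaving enterprise databases out.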

Safety RAG addresses both of these issues by seeing them as two sides of the same coin.

The basic idea of SRAG is to complete GenAI's reach into enterprise data by unifying enterprise databases into a knowledge graph (KG), then using that KG with Semantic Parsing to ground LLM outputs and thereby eliminate hallucinations. SRAG is fundamentally premised on bootstrapping a KG from databases and then using that KG to filter the hallucinations that occur when an LLM extracts knowledge from documents.

RAG trusts LLMs to give users insights about the world; it augments raw LLM output with contextualized enterprise data extracted from enterprise documents only. SRAG, via Voicebox, uses an LLM to understand human intent but gives users insights by querying a knowledge graph that (1) is hallucination free and (2) contains knowledge derived from both enterprise databases and documents.

LLMs are the source of hallucinations. The LLMs used inside SRAG systems will absolutely hallucinate like every other LLM, but an SRAG system remains free of hallucinations as long as it never shows users ungrounded LLM outputs. Hallucination-free is a property of the software application, not of the LLM.

Safety RAG grounds LLM outputs in an enterprise knowledge graph to filter hallucinations before they can harm users.

We can see the main components of SRAG in its reference architecture. On the left, enterprise data sources are grouped into two types: first, structured and semi-structured; second, unstructured.

Structured data sources can be SQL or NoSQL databases, and Voicebox's automation services map them to a unified, harmonized graph data model. Stardog Designer also uses Voicebox to customize graph data models based on Knowledge Packs that we've developed for key use cases in targeted verticals, largely in partnership with Accenture and other alliance partners. Voicebox semi-automates this process: it generates the mappings, and users verify them.
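
To make the idea of mapping a structured source into a graph data model concrete, here is a minimal Python sketch. It is not Voicebox's automation service or Stardog's mapping syntax. The `MAPPING` dictionary, the `ex:` identifiers, and `map_rows_to_triples` are hypothetical, and an in-memory SQLite database stands in for an enterprise source. Each row becomes a typed entity, selected columns become properties, and a foreign key becomes a relationship between entities.

```python
import sqlite3

# Hypothetical mapping spec (not Stardog's mapping syntax): for each table, how rows
# become entities, which columns become properties, and which foreign keys become links.
MAPPING = {
    "customers": {
        "subject": ("ex:Customer/", "id"),
        "class": "ex:Customer",
        "properties": {"name": "ex:name", "country": "ex:country"},
    },
    "accounts": {
        "subject": ("ex:Account/", "id"),
        "class": "ex:Account",
        "properties": {"balance": "ex:balance"},
        "links": {"owner_id": ("ex:ownedBy", "ex:Customer/")},
    },
}


def map_rows_to_triples(conn, mapping):
    """Turn every mapped row into (subject, predicate, object) triples."""
    triples = set()
    for table, spec in mapping.items():
        prefix, key_col = spec["subject"]
        links = spec.get("links", {})
        cols = [key_col, *spec["properties"], *links]
        # Table and column names come from the trusted mapping, not from user input.
        rows = conn.execute(f"SELECT {', '.join(cols)} FROM {table}")
        for row in rows:
            record = dict(zip(cols, row))
            subject = f"{prefix}{record[key_col]}"
            triples.add((subject, "rdf:type", spec["class"]))
            for col, prop in spec["properties"].items():
                if record[col] is not None:
                    triples.add((subject, prop, record[col]))
            # A foreign key value becomes an edge to the referenced entity.
            for col, (prop, target_prefix) in links.items():
                if record[col] is not None:
                    triples.add((subject, prop, f"{target_prefix}{record[col]}"))
    return triples


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, country TEXT);
    CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance REAL, owner_id INTEGER);
    INSERT INTO customers VALUES (1, 'Acme Corp', 'US');
    INSERT INTO accounts VALUES (10, 2500.0, 1);
""")
kg = map_rows_to_triples(conn, MAPPING)
for triple in sorted(kg, key=str):
    print(triple)
```

In the semi-automated workflow, a generated spec like `MAPPING` is the kind of artifact a user would review and correct before it is applied.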

Unstructured data sources can be any kind of document with text content, such as PDFs or Word documents. Voicebox uses customized LLMs, agents, and specialized data extraction transformers to extract Named Entities, Events and Relationships (NEER) from text content. Of course, this LLM-powered extraction model will hallucinate or otherwise generate incorrect data.

But that's okay, because these are intermediate outputs that Voicebox users never see without further processing. Raw extraction results are validated against the structured data unified in the knowledge graph. This process is called grounding, and it's the reason AI safety is connected to AI data reach.

For example, if a relationship between two entities has been extracted from a document, the first validation step is to locate the corresponding entities in the graph. Next, the relationship type is located in the KG, and the matched entities are checked against the relationship's typing constraints. Other constraints associated with the relationship, such as a uniqueness constraint, are also used for validation. If any of these validation steps fails, the extracted information isn't ingested; instead, it is categorized as potentially unsafe and made available for additional processing, including curation or supervised verification.
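
The validation steps just described can be sketched as follows, assuming a deliberately simplified graph: an index from entity mentions to (IRI, type) pairs, plus a schema giving each relationship type a domain, a range, and a uniqueness flag. `ExtractedFact`, `KnowledgeGraph`, `validate_and_ingest`, and the sample data are all hypothetical, and real entity resolution is much harder than the exact label lookup used here.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ExtractedFact:
    subject: str   # entity mention as extracted from the document
    relation: str  # relationship type proposed by the (hallucination-prone) extractor
    obj: str       # entity mention on the other side of the relationship


@dataclass
class KnowledgeGraph:
    entities: dict   # mention -> (entity IRI, entity type), built from structured sources
    relations: dict  # relationship type -> (domain type, range type, unique?)
    edges: set = field(default_factory=set)  # (subject IRI, relation, object IRI)


def validate_and_ingest(kg, fact, quarantine):
    """Ingest the fact only if every check passes; otherwise quarantine it with a reason."""
    # Step 1: locate both entities in the graph.
    subj, obj = kg.entities.get(fact.subject), kg.entities.get(fact.obj)
    if subj is None or obj is None:
        quarantine.append((fact, "entity not found"))
        return False
    # Step 2: locate the relationship type in the schema.
    schema = kg.relations.get(fact.relation)
    if schema is None:
        quarantine.append((fact, "unknown relationship type"))
        return False
    domain, range_type, unique = schema
    # Step 3: check the relationship's typing constraints.
    if subj[1] != domain or obj[1] != range_type:
        quarantine.append((fact, "typing constraint violated"))
        return False
    # Step 4: a unique relationship allows at most one object per subject.
    if unique and any(s == subj[0] and r == fact.relation and o != obj[0]
                      for s, r, o in kg.edges):
        quarantine.append((fact, "uniqueness constraint violated"))
        return False
    kg.edges.add((subj[0], fact.relation, obj[0]))
    return True


kg = KnowledgeGraph(
    entities={
        "Acme Corp": ("ex:Customer/1", "Customer"),
        "Globex": ("ex:Customer/2", "Customer"),
        "Jane Doe": ("ex:Person/7", "Person"),
    },
    relations={"hasCEO": ("Customer", "Person", True)},
    edges={("ex:Customer/2", "hasCEO", "ex:Person/9")},
)
facts = [
    ExtractedFact("Acme Corp", "hasCEO", "Jane Doe"),    # passes every check
    ExtractedFact("Jane Doe", "hasCEO", "Acme Corp"),    # reversed: typing fails
    ExtractedFact("Acme Corp", "hasCEO", "John Smith"),  # entity not in the KG
    ExtractedFact("Globex", "hasCEO", "Jane Doe"),       # Globex already has a CEO
    ExtractedFact("Acme Corp", "acquired", "Globex"),    # relationship type not in the KG
]
quarantine = []
results = [validate_and_ingest(kg, f, quarantine) for f in facts]
print(results)
for fact, reason in quarantine:
    print(reason, fact)
```

Quarantined facts keep their failure reason, which is what makes later curation or supervised verification practical.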

The end result is a knowledge graph whose backbone is the enterprise's structured data sources, augmented, contextualized, and in some cases completed by safe, verified information from unstructured data. When a user asks a question, Voicebox uses an LLM to perform Semantic Parsing; that is, it converts the user's natural language question into one or more structured queries over this KG.
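
Here is a toy illustration of Semantic Parsing, assuming a query language of simple triple patterns rather than a real graph query language such as SPARQL. `parse_question` is a hypothetical stub standing in for the LLM, and `unify`, `match`, and `answer` are illustrative names. The point it shows is that the LLM's job ends at producing a query; the answer comes from executing that query against the KG.

```python
# Toy knowledge graph: a set of (subject, predicate, object) triples.
KG = {
    ("ex:Account/10", "ex:ownedBy", "ex:Customer/1"),
    ("ex:Account/11", "ex:ownedBy", "ex:Customer/1"),
    ("ex:Account/12", "ex:ownedBy", "ex:Customer/2"),
    ("ex:Customer/1", "ex:name", "Acme Corp"),
    ("ex:Customer/2", "ex:name", "Globex"),
}


def parse_question(question):
    """Hypothetical stand-in for the LLM semantic parser.

    Returns (answer variable, triple patterns); terms starting with '?' are
    variables. This stub understands exactly one canned question.
    """
    if question == "Which accounts does Acme Corp own?":
        return "?acct", [("?acct", "ex:ownedBy", "?cust"),
                         ("?cust", "ex:name", "Acme Corp")]
    raise ValueError("question not covered by this stub")


def unify(pattern, triple, binding):
    """Extend binding so that pattern matches triple, or return None."""
    extended = dict(binding)
    for term, value in zip(pattern, triple):
        if term.startswith("?"):
            # A variable must bind to the same value across all patterns.
            if extended.setdefault(term, value) != value:
                return None
        elif term != value:
            return None
    return extended


def match(patterns, triples, binding):
    """Yield every variable binding that satisfies all patterns (a naive join)."""
    if not patterns:
        yield binding
        return
    first, *rest = patterns
    for triple in triples:
        extended = unify(first, triple, binding)
        if extended is not None:
            yield from match(rest, triples, extended)


def answer(question, kg):
    var, patterns = parse_question(question)
    # The answer is read off the query results over the KG, never off free LLM text.
    return sorted({b[var] for b in match(patterns, kg, {})})


print(answer("Which accounts does Acme Corp own?", KG))
# → ['ex:Account/10', 'ex:Account/11']
```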

Stardog Voicebox uses several agents to do its job as a fast, safe AI Data Assistant, which also means using many LLMs, not just one. A brief overview of the agent-powered "jobs to be done" includes:

These agents rely on LLMs to a greater or lesser degree, and LLMs can hallucinate while generating tokens. These hallucinations can be managed, however, because with Semantic Parsing Voicebox generates logical language expressions that the Stardog platform validates and executes. So if hallucinations occur in an intensity or amount beyond what Voicebox can repair, then in the SRAG worst-case failure mode, Voicebox fails to generate a valid query and tells the user that it cannot answer.
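
A minimal sketch of that failure mode follows. It assumes a schema that is just a set of known predicates and a query generator that can be re-prompted with the validator's error message. `validate`, `answer_safely`, the `max_attempts` budget, and the simulated generators are hypothetical, not Voicebox internals. A query that never validates leads to a refusal instead of an answer.

```python
from typing import Optional

# Hypothetical schema: the predicates the KG actually defines.
SCHEMA_PREDICATES = {"rdf:type", "ex:name", "ex:ownedBy"}


def validate(query) -> Optional[str]:
    """Return an error message if the query is invalid, else None."""
    if not query:
        return "empty query"
    for pattern in query:
        if len(pattern) != 3:
            return f"malformed pattern {pattern!r}"
        predicate = pattern[1]
        # Variables may appear as predicates; constants must exist in the schema.
        if not predicate.startswith("?") and predicate not in SCHEMA_PREDICATES:
            return f"unknown predicate {predicate!r}"
    return None


def answer_safely(question, generate, execute, max_attempts=3):
    """Execute only a query that validates; otherwise refuse to answer."""
    feedback = None
    for _ in range(max_attempts):
        query = generate(question, feedback)
        error = validate(query)
        if error is None:
            return execute(query)
        feedback = error  # hand the validator's error back to the generator for repair
    return "Sorry, I cannot answer that question."


# Simulated LLM generators (hypothetical), for demonstration only.
def repairs_after_feedback(question, feedback):
    # First attempt hallucinates a predicate; the retry uses the real one.
    if feedback is None:
        return [("?acct", "ex:owns", "?cust")]
    return [("?acct", "ex:ownedBy", "?cust")]


def always_hallucinates(question, feedback):
    return [("?x", "ex:madeUpRelation", "?y")]


def run_query(query):
    # Stand-in for executing a validated query on the platform.
    return f"executed {len(query)} pattern(s)"


print(answer_safely("Who owns account 10?", repairs_after_feedback, run_query))
print(answer_safely("Who owns account 10?", always_hallucinates, run_query))
```

The hallucinated predicate is caught and repaired in the first case; in the second, the user gets an honest refusal rather than an ungrounded answer.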

Sometimes safety requires saying “I don’t know”. That’s real AI safety: help the human user by augmenting what they know and, when that can’t happen, then do no harm.

There’s more about Stardog Voicebox’s novel approach to agentic workflows for LLM in The Agentic Present: Unlocking Creativity with Automation.

Our motivating design assumptions and intuitions for SRAG include the following:

Our focus is fast, accurate insights from data through conversations with Voicebox, the AI Data Assistant. SRAG is carefully designed to deliver that value to our customers and users. Better insights depend on users being able to access all the relevant data, and that's what SRAG enables.