The Agentic Present: Unleashing Creativity with Automation

Stardog Voicebox agents leverage Stardog’s knowledge graph platform to serve as a fast, accurate, and hallucination-free AI data assistant on users’ behalf. Doing so requires them to interpret queries, navigate entity relationships, and compose meaningful responses, among many other tasks. The result? Faithful adherence to the facts that matter most in high-stakes decision-making.

The importance of hallucination-free GenAI cannot be overstated, particularly in industries where accuracy and trust are key. By anchoring agents in the ground truth of the Stardog knowledge graph, Voicebox ensures that the answers it gives users are reliable, verifiable, and safe. This commitment to factual accuracy increases user confidence and allows GenAI to be deployed safely in regulated domains.

Recently we introduced Safety RAG, our approach to building 100% hallucination-free GenAI apps and a critical part of what makes Voicebox a fast, accurate, and safe AI data assistant.

In this post we’ll focus on the way we use LLM agents inside Voicebox to unleash human creativity and agency through the power of automation.

In the realm of LLM app development, agents have emerged as a powerful paradigm for extending the capabilities of language models. Andrew Ng recently described the four agentic workflow patterns he sees most often: reflection, tool use, planning, and multi-agent collaboration.

LLMs can act as planners that invoke tools to build up their picture of the world. The three most common tools are web search, calling a Python function, and calling a REST API over HTTP. This is very expressive, but it also makes it very hard, if not impossible, to validate the LLM’s actions, which is crucial in many settings, especially in regulated environments.

Our approach to agentic workflows, in contrast to the patterns Ng describes, is to focus on

Let’s examine what this means in more detail.

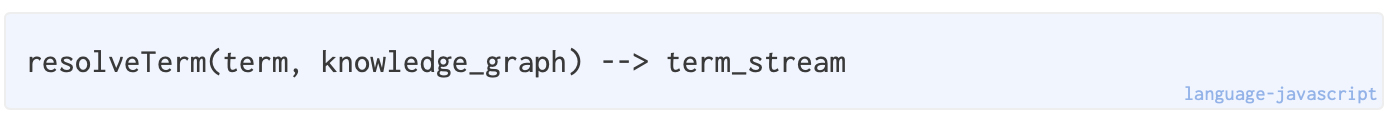

We call our agentic workflow pattern term resolution. Rather than equipping agents with a range of tools to invoke, we treat LLM-powered agents as more limited, with a single callable, conceptually:

These agents interact with a knowledge graph that’s full of data and domain logic named by terms; that is, hierarchical, recursive symbols defined in terms of other symbols and data primitives. We think of this as a “term space”. The primary interaction between a person and this term space is via LLM-powered agents that are chiefly concerned with term resolution. We can think of natural language as a stream of terms to be resolved.
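To make “natural language as a stream of terms” concrete, here is a minimal Python sketch. It assumes a toy term space (the dictionary `TERM_SPACE`, its term names, and the `ex:` identifiers are all hypothetical) in which each term is defined either by a data primitive or by other terms, and it scans a question for the longest known multi-word term at each position. It illustrates the idea only; it is not how Voicebox reads questions.

```python
from typing import Union

# Hypothetical term space: a term is defined either by a data primitive
# (here a string naming an illustrative graph identifier) or by a list of
# other terms it is built from.
Definition = Union[str, list[str]]

TERM_SPACE: dict[str, Definition] = {
    "Trade": "ex:Trade",                             # primitive: a class
    "Counterparty": "ex:Counterparty",               # primitive: a class
    "Notional": "ex:notionalValue",                  # primitive: a property
    "TradeCounterparty": ["Trade", "Counterparty"],  # defined by other terms
}


def term_stream(question: str, space: dict[str, Definition], max_words: int = 3) -> list[str]:
    """Return the known terms in a question, in order, preferring the longest
    multi-word match at each position; words that match no term are skipped."""
    words = [w.strip("?,.").lower() for w in question.split()]
    terms: list[str] = []
    i = 0
    while i < len(words):
        for n in range(min(max_words, len(words) - i), 0, -1):
            # "trade counterparty" -> "TradeCounterparty"
            candidate = "".join(w.capitalize() for w in words[i:i + n])
            if candidate in space:
                terms.append(candidate)
                i += n
                break
        else:
            i += 1  # no known term starts at this word; move on
    return terms


print(term_stream("Which trade counterparty had the largest notional?", TERM_SPACE))
# ['TradeCounterparty', 'Notional']
```

Trying the longest candidate first is what lets the composite term `TradeCounterparty` win over its constituents when both are in the term space.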

In contrast to a RAG system, Voicebox is built on Semantic Parsing: it resolves natural language into logical expressions in one or more formal languages, such as SPARQL, integrity constraints, and Datalog rules. A user’s question is converted into an equivalent query (or a set of queries, plus expressions in other languages provided by the knowledge graph services described below), which eventually yields a stream of tokens, that is, more natural language that answers the user’s question and, when things are working correctly, offers some insight into the world that serves the user’s job to be done.

When an agent encounters an unknown term, it resolves it recursively by breaking it into constituents and resolving each one until it reaches primitives or known terms. A complex banking concept like TradeCounterparty may be decomposed into Trade and Counterparty, and so on, until it is grasped, that is, until it resolves to an expression composed of logical-language constructs or data primitives.
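The recursive decomposition described above can be sketched in a few lines of Python. Everything here is an assumption for illustration: the `KNOWN` table, its SPARQL-style triple patterns, and the `ex:` vocabulary are hypothetical, and CamelCase splitting stands in for whatever an LLM-powered agent would actually do to find a term’s constituents. Resolution tries the longest known prefix first, recurses on the remainder, and backtracks if the remainder cannot be resolved.

```python
import re

# Hypothetical known terms, each already resolved to a primitive expression
# (illustrative SPARQL triple patterns over a made-up ex: vocabulary).
KNOWN: dict[str, str] = {
    "Trade": "?trade a ex:Trade .",
    "Counterparty": "?trade ex:counterparty ?cp .",
    "HighRisk": "?trade ex:riskLevel ex:High .",
}


def constituents(term: str) -> list[str]:
    """Split a CamelCase term into parts: 'TradeCounterparty' -> ['Trade', 'Counterparty']."""
    return re.findall(r"[A-Z][a-z0-9]*", term)


def resolve(term: str, known: dict[str, str]) -> list[str]:
    """Recursively resolve a term into a list of primitive expressions."""
    if term in known:
        return [known[term]]
    parts = constituents(term)
    # Try the longest known prefix first, then resolve the remainder recursively.
    for cut in range(len(parts) - 1, 0, -1):
        head, rest = "".join(parts[:cut]), "".join(parts[cut:])
        if head in known:
            try:
                return [known[head]] + resolve(rest, known)
            except KeyError:
                continue  # remainder unresolvable: backtrack to a shorter prefix
    # A real agent might consult the LLM or the user here; this sketch just fails.
    raise KeyError(f"cannot resolve term: {term!r}")


# Joining the patterns gives the body of a SPARQL WHERE clause.
print("\n".join(resolve("HighRiskTradeCounterparty", KNOWN)))
```

A production resolver would also have to reconcile variable names across constituents; this sketch sidesteps that by having every pattern share `?trade`.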

By “subsumption layer” we mean an abstraction layer where, given A→B→C, everything that C includes is included in B and everything B includes is included in A. This applies to term resolution by agents, that is, converting “What is the largest single order placed by a customer last quarter in a country that’s currently oil-embargoed by the Saudis and dependent on Venezuelan crude” into a knowledge graph query that is in turn composed of stored queries, class and property unfolding via logical inference, and, say, integrity constraints. But the idea of term resolution can also be applied to the system itself: in this case A is the Voicebox UX and B, C, etc. are the deep knowledge graph capabilities of Stardog. Voicebox is a UX, of course, but in some deep sense it is also the root of a tree of term resolvers, that is, LLM-wrapped agents.
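One way to read the subsumption property is as a transitive closure: whatever a node includes, directly or through the nodes below it, is included by every node above it. The Python sketch below assumes that reading. The same `unfold` function is applied to a hypothetical class hierarchy (the kind of class unfolding a reasoner can perform when rewriting a query) and to a hypothetical tree of Voicebox layers; none of the class or layer names come from Stardog.

```python
# Hypothetical hierarchies: each node maps to the nodes directly below it (A -> B -> C).
CLASSES: dict[str, list[str]] = {
    "Party": ["Counterparty"],
    "Counterparty": ["Bank", "Corporate"],
}
LAYERS: dict[str, list[str]] = {
    "VoiceboxUX": ["QueryAgent"],
    "QueryAgent": ["StoredQueries", "Reasoner", "IntegrityConstraints"],
}


def unfold(node: str, below: dict[str, list[str]]) -> set[str]:
    """Return the node plus everything it transitively includes."""
    seen = {node}
    stack = [node]
    while stack:
        for child in below.get(stack.pop(), []):
            if child not in seen:  # guards against cycles and repeats
                seen.add(child)
                stack.append(child)
    return seen


def unfold_type_pattern(var: str, cls: str) -> str:
    """Rewrite '?x a Cls' so it also matches instances of every subclass of Cls
    (a SPARQL fragment; the ex: prefix is assumed to be declared elsewhere)."""
    values = " ".join(f"ex:{c}" for c in sorted(unfold(cls, CLASSES)))
    return f"VALUES ?cls {{ {values} }} {var} a ?cls ."


print(unfold_type_pattern("?cp", "Counterparty"))
# VALUES ?cls { ex:Bank ex:Corporate ex:Counterparty } ?cp a ?cls .

# The subsumption property: what a lower layer includes, every higher layer includes.
assert unfold("Reasoner", LAYERS) <= unfold("QueryAgent", LAYERS) <= unfold("VoiceboxUX", LAYERS)
```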

While the visible interface of Voicebox is plain-text input and hypertext (and other) output, the capabilities of the underlying Stardog native knowledge graph platform are accessible to users via Voicebox, plain-language inputs, and an agent-powered service layer that works behind the scenes on the user’s behalf. Some of those services are depicted in the architecture diagram above (others are omitted for concision) and include:

Suppose a user asks Voicebox the following question:

Show me the European counterparties involved in high-risk trades with a notional value over $1 million in the last quarter, excluding any counterparties based in non-EMEA countries subject to sanctions.
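To give a feel for what Semantic Parsing might produce for this question, the sketch below takes a structured reading of it and renders that reading as SPARQL. The `ParsedQuestion` fields, the `ex:` vocabulary (`ex:riskLevel`, `ex:inRegion`, `ex:subjectTo`, and so on), and the interpretation of “last quarter” as the previous calendar quarter are all assumptions made for illustration; in Voicebox the structured reading would come from the agents and the knowledge graph’s own terms.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ParsedQuestion:
    """Hypothetical structured reading of the user's question."""
    region: str                      # where counterparties must be based
    risk_level: str                  # e.g. "High" for high-risk trades
    min_notional: int                # exclusive lower bound on notional value
    start: date                      # period start (inclusive)
    end: date                        # period end (exclusive)
    exclude_sanctioned_outside: str  # drop sanctioned countries outside this region


def last_quarter(today: date) -> tuple[date, date]:
    """Return [start, end) of the calendar quarter before the one containing `today`."""
    this_q_start = date(today.year, 3 * ((today.month - 1) // 3) + 1, 1)
    if this_q_start.month == 1:
        return date(today.year - 1, 10, 1), this_q_start
    return date(today.year, this_q_start.month - 3, 1), this_q_start


def to_sparql(q: ParsedQuestion) -> str:
    """Render the parsed question as SPARQL over a made-up ex: vocabulary."""
    return f"""PREFIX ex: <http://example.org/finance#>
PREFIX xsd: <http://www.w3.org/2001/XMLSchema#>
SELECT DISTINCT ?cp WHERE {{
  ?trade ex:counterparty ?cp ;
         ex:riskLevel ex:{q.risk_level} ;
         ex:notionalValue ?notional ;
         ex:tradeDate ?d .
  ?cp ex:basedIn ?country .
  ?country ex:inRegion ex:{q.region} .
  FILTER (?notional > {q.min_notional})
  FILTER (?d >= "{q.start.isoformat()}"^^xsd:date && ?d < "{q.end.isoformat()}"^^xsd:date)
  # exclude countries that are sanctioned and outside the named region
  FILTER NOT EXISTS {{
    ?country ex:subjectTo ?sanction .
    FILTER NOT EXISTS {{ ?country ex:inRegion ex:{q.exclude_sanctioned_outside} }}
  }}
}}"""


start, end = last_quarter(date.today())
print(to_sparql(ParsedQuestion("Europe", "High", 1_000_000, start, end, "EMEA")))
```

The sanctions clause is encoded literally, as the user phrased it. Whether it ever removes a result depends on domain logic such as “every country in Europe is in EMEA”, which is the kind of knowledge a knowledge graph, rather than the query text, is meant to carry.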

As we described in the Safety RAG introduction, various agents inside Voicebox operate with different responsibilities. Behind the scenes, these Voicebox agents:

In this example, Voicebox demonstrates its ability to handle complex, multi-faceted questions by leveraging Semantic Parsing, Safety RAG, and the underlying knowledge graph. It applies constraints, inference rules, and graph queries to provide accurate, fast, and 100% hallucination-free answers to the user’s question.
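The three ingredients named above (constraints, inference rules, and graph queries) can be illustrated on a toy in-memory graph. This Python sketch is a stand-in built on assumptions, not Voicebox’s pipeline: the triples, the hypothetical `riskScore > 0.7` rule that derives `HighRiskTrade`, and the one-counterparty-per-trade constraint are all made up. It checks the constraint first, applies the rule, and only then answers a simplified version of the question.

```python
# Toy graph of (subject, predicate, object) triples; all data is made up.
Triple = tuple[str, str, object]

GRAPH: set[Triple] = {
    ("t1", "counterparty", "acme"), ("t1", "notional", 2_500_000), ("t1", "riskScore", 0.9),
    ("t2", "counterparty", "bolt"), ("t2", "notional", 4_000_000), ("t2", "riskScore", 0.2),
    ("acme", "region", "Europe"), ("bolt", "region", "Europe"),
}


def value(graph: set[Triple], s: object, p: str) -> object:
    """First object for the pattern (s, p, ?), or None if there is none."""
    return next((o for s2, p2, o in graph if s2 == s and p2 == p), None)


def violations(graph: set[Triple]) -> list[str]:
    """Hypothetical integrity constraint: every trade (anything with a notional)
    has exactly one counterparty."""
    trades = sorted({s for s, p, _ in graph if p == "notional"})
    return [t for t in trades
            if sum(1 for s, p, _ in graph if s == t and p == "counterparty") != 1]


def infer_high_risk(graph: set[Triple]) -> set[Triple]:
    """Hypothetical inference rule: riskScore(t) > 0.7  =>  type(t, HighRiskTrade)."""
    derived = {(s, "type", "HighRiskTrade") for s, p, o in graph
               if p == "riskScore" and isinstance(o, (int, float)) and o > 0.7}
    return graph | derived


def answer(graph: set[Triple]) -> list[str]:
    """European counterparties of high-risk trades with a notional over 1M."""
    bad = violations(graph)
    if bad:
        raise ValueError(f"integrity constraint violated by: {bad}")
    g = infer_high_risk(graph)
    return sorted({value(g, t, "counterparty") for t, p, o in g
                   if p == "type" and o == "HighRiskTrade"
                   and (value(g, t, "notional") or 0) > 1_000_000
                   and value(g, value(g, t, "counterparty"), "region") == "Europe"})


print(answer(GRAPH))  # ['acme']
```

Checking constraints before answering means a malformed graph produces an explicit error rather than a plausible-looking but wrong answer.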

Today, using the agent workflow, Voicebox is very good at answering questions that can be expressed as a structured query over the currently defined terms. The next step is to integrate long-running, asynchronous processes into the agent workflow to derive more, and deeper, insights from data. In other words, Voicebox agents are currently read-only: they gather insight from the knowledge graph without modifying it. We are working on wrapping our data services, which are non-trivial and growing, with agents, and then teaching those agents to derive new insights on users’ behalf by running semi-autonomously, so that they can enrich the knowledge graph with additional information.
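Here is a hypothetical Python sketch of the difference between read-only agents and semi-autonomous, graph-enriching ones. It assumes a made-up `parentOf` relation, derives transitive `ancestorOf` facts by iterating to a fixpoint, and writes them back only if an `approve` callback (standing in for a human in the loop) accepts them; with an approval function that always refuses, the agent behaves as read-only. None of these names are Voicebox or Stardog APIs.

```python
from typing import Callable

Triple = tuple[str, str, str]


def ancestor_facts(graph: set[Triple]) -> set[Triple]:
    """Derive transitive ancestorOf facts from parentOf edges, iterating to a fixpoint."""
    edges = {(s, o) for s, p, o in graph if p == "parentOf"}
    closure = set(edges)
    while True:
        new = {(a, d) for a, b in closure for c, d in edges if b == c} - closure
        if not new:
            break  # fixpoint reached: nothing new can be derived
        closure |= new
    return {(a, "ancestorOf", b) for a, b in closure} - graph


def enrichment_agent(graph: set[Triple], approve: Callable[[set[Triple]], bool]) -> set[Triple]:
    """Propose derived facts and add them to the graph only if `approve` accepts them."""
    proposed = ancestor_facts(graph)
    if proposed and approve(proposed):
        return graph | proposed
    return graph  # read-only outcome: the graph is unchanged


G: set[Triple] = {("HoldCo", "parentOf", "BankA"), ("BankA", "parentOf", "BranchX")}
# Toy approval policy standing in for human review: accept small batches only.
enriched = enrichment_agent(G, approve=lambda facts: len(facts) < 10)
print(sorted(enriched - G))
```

Keeping derivation and write-back separate is one way to let an agent run semi-autonomously while leaving the decision to change the graph with a policy or a person.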

Why? Because humans are the only source of value in the universe. Because it’s given to us to visit this place for a time that’s too short by any measure. Because life is too short to do something by hand that a computer can do for us better, cheaper, faster. The best AI is an augmentation of human creativity; an unleashing of our latent powers to create. Finally, we’re pursuing an agentic future because unlocking our ability to connect the disconnected bits of the world to create non-linear value is why we built Stardog.
